The Quantum Chromodynamics Nuclear Tomography (QuantOm) Collaboration brings together theoretical and experimental physicists, applied mathematicians, data scientists, and computer scientists with a common goal: to understand the fundamental nature of visible matter.

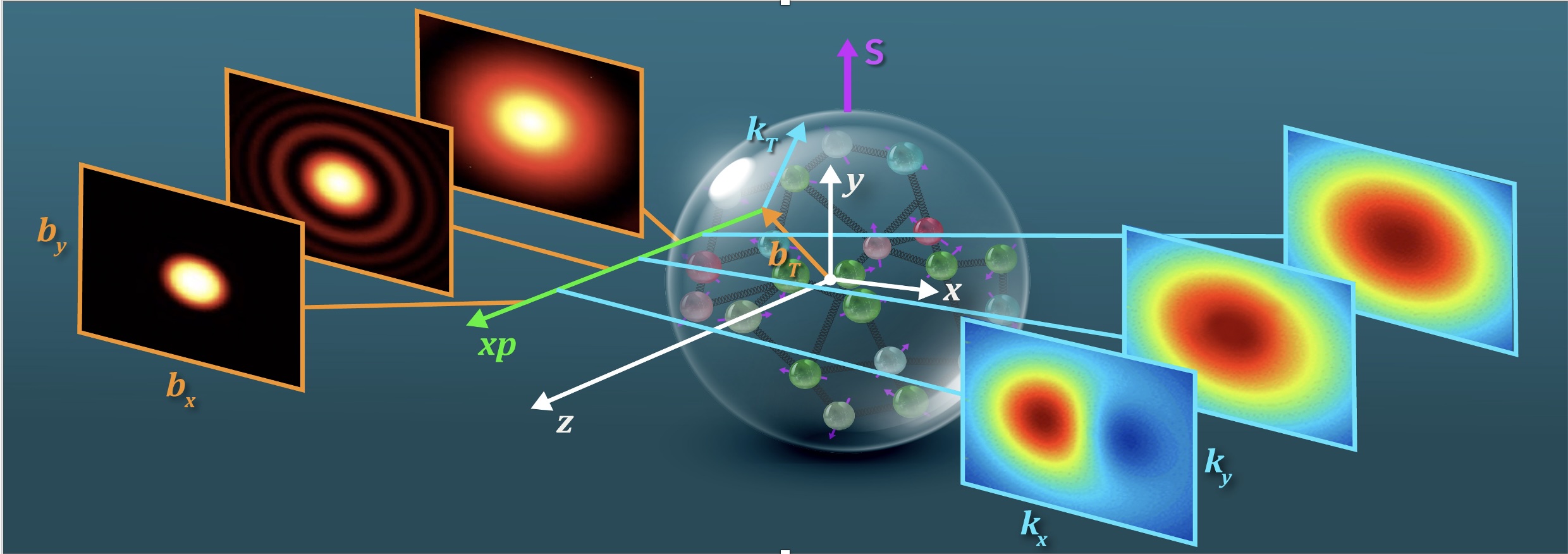

Our mission is to reconstruct three-dimensional images of the fundamental particles, quarks and gluons, that form the femtometer-scale structure of visible matter: atomic nuclei and their proton and neutron building blocks. The binding of quarks and gluons into protons and neutrons generates nearly all the mass of the visible universe. This mass arises from a sophisticated dance of quantum interactions, which are described by quantum chromodynamics (QCD), the fundamental theory of the strong nuclear force.

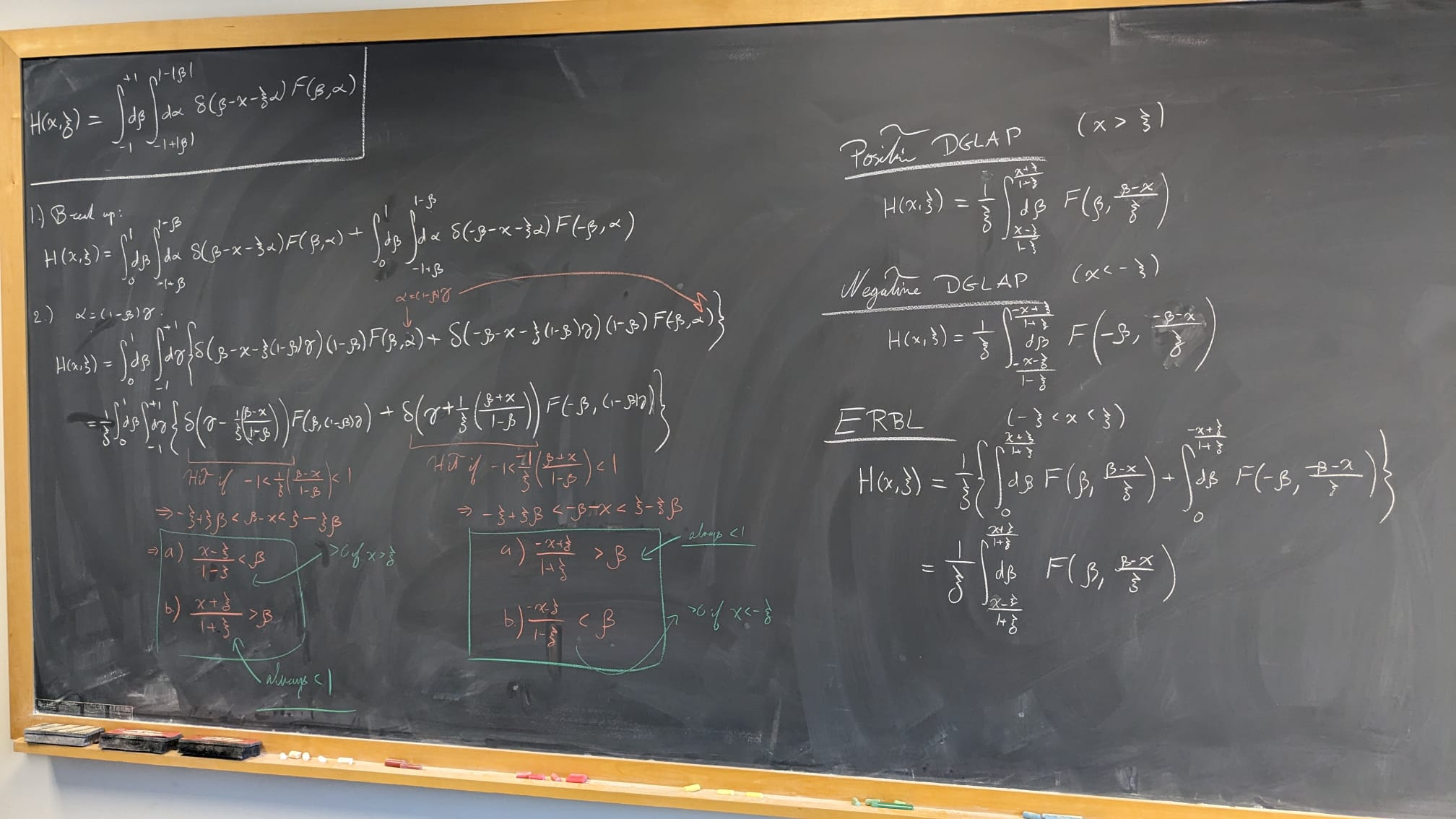

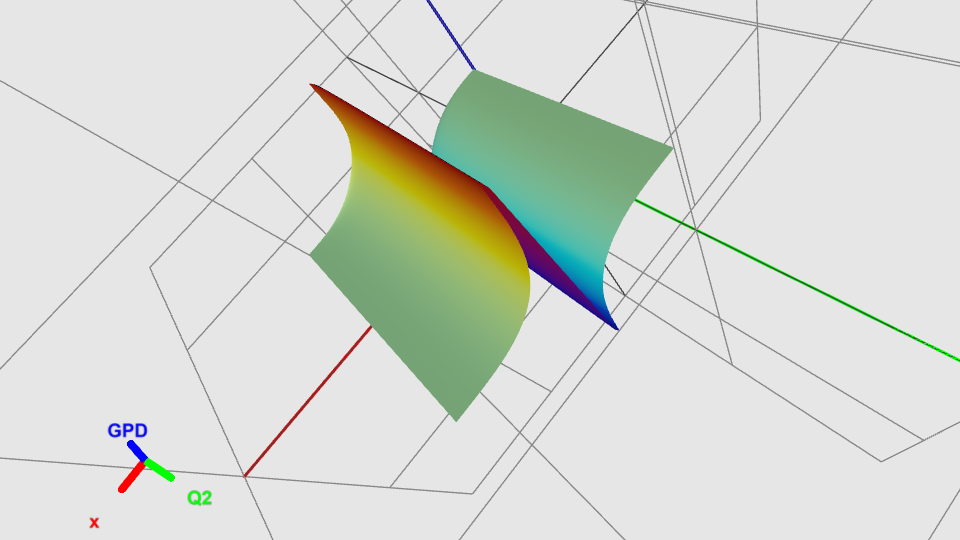

The multi-dimensional internal structure of protons and neutrons in terms of quarks and gluons is encoded in Quantum Correlation Functions (QCFs). The QuantOm Collaboration is developing a state-of-the-art inference framework to extract these QCFs from experimental data taken at particle accelerator facilities worldwide, including Jefferson Lab (JLab) and the upcoming Electron-Ion Collider (EIC).

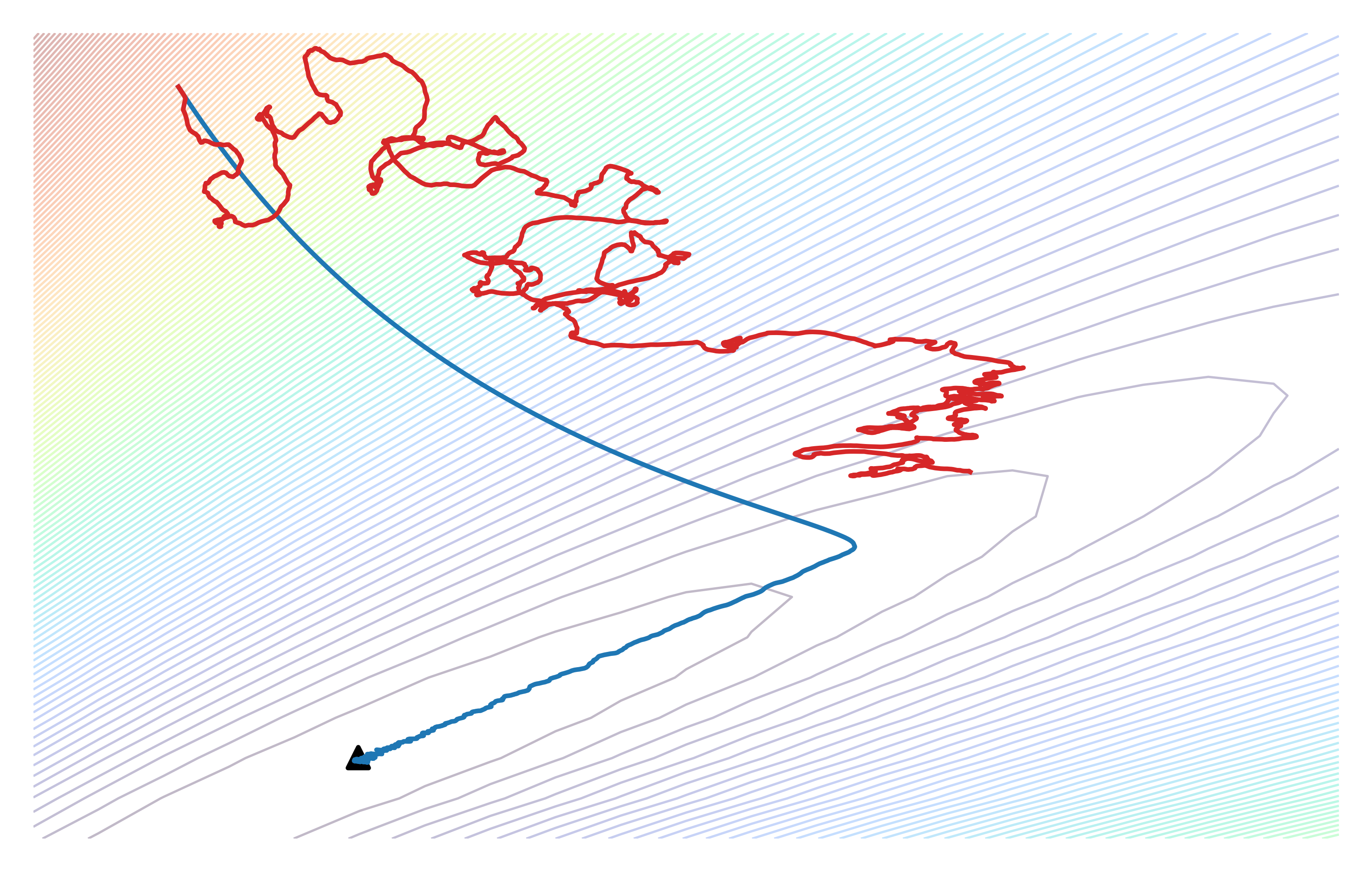

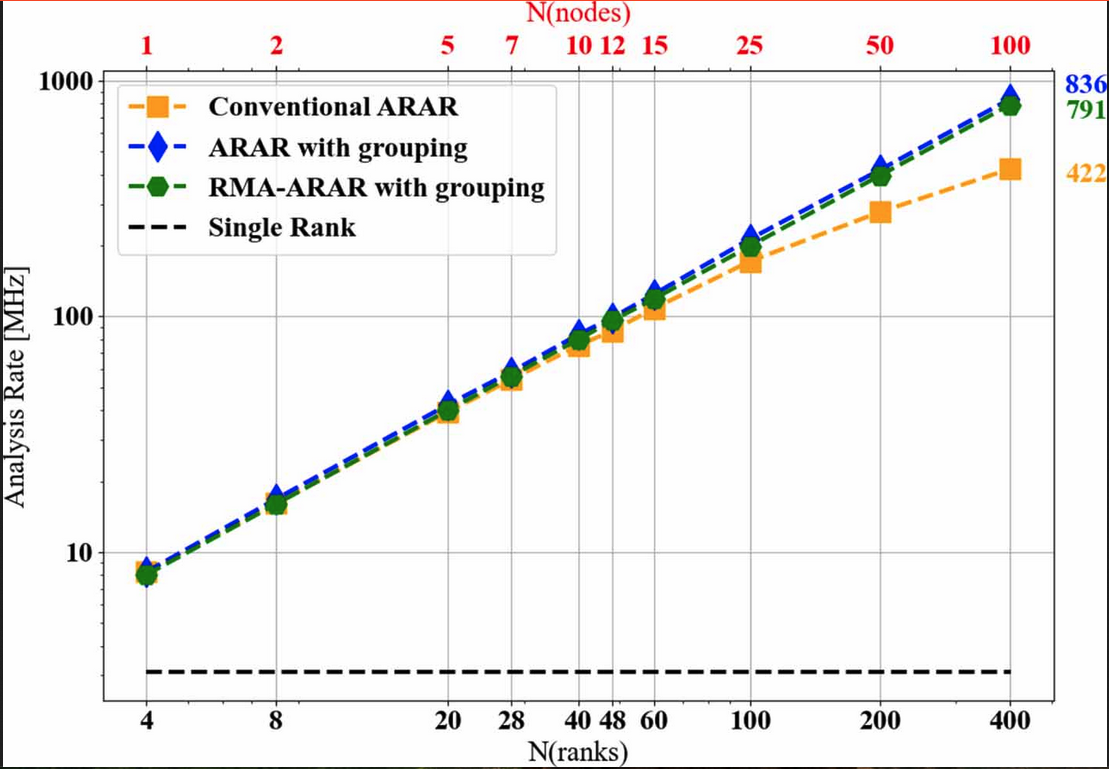

Our approach extends traditional histogram-based methods by incorporating event-level analysis, which preserves the full information content of the experimental data. Detector effects are included by folding them into the theoretical predictions, in contrast to traditional methods that unfold them from the data. Doing so requires unifying modern theory frameworks based on QCD factorization theorems with sophisticated simulations of experimental detectors in a single pipeline. The resulting workflow robustly incorporates both theoretical and experimental effects, such as radiative corrections and quantum-mechanical interference between real and virtual processes, on an equal footing.
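To make the folding idea concrete, here is a minimal toy sketch in plain Python. It is not the collaboration's pipeline: the Gaussian "theory", the Gaussian detector resolution, the grid scan, and every name and number in it are assumptions chosen purely for illustration. The detector response is folded into the predicted density, and that folded density is then compared with the measured events one by one, without binning or unfolding the data.

```python
import math
import random

# All names and numbers below are hypothetical, chosen only for illustration.
TRUE_MEAN = 2.0      # parameter of the toy "theory" used to make pseudo-data
TRUTH_WIDTH = 1.0    # intrinsic width of the toy truth-level distribution
DETECTOR_RES = 0.5   # Gaussian detector resolution


def simulate_events(n_events, mean, rng):
    """Make pseudo-data: draw a truth-level value, then smear it."""
    events = []
    for _ in range(n_events):
        truth = rng.gauss(mean, TRUTH_WIDTH)
        measured = truth + rng.gauss(0.0, DETECTOR_RES)
        events.append(measured)
    return events


def folded_log_density(x, mean):
    """Log of the detector-level density predicted by the toy theory.

    Folding a Gaussian truth density with a Gaussian resolution gives a
    Gaussian whose variance is the sum of the two variances.
    """
    var = TRUTH_WIDTH ** 2 + DETECTOR_RES ** 2
    return -0.5 * (x - mean) ** 2 / var - 0.5 * math.log(2.0 * math.pi * var)


def negative_log_likelihood(events, mean):
    """Unbinned (event-level) negative log-likelihood of the measured events."""
    return -sum(folded_log_density(x, mean) for x in events)


def fit_mean(events, lo=1.0, hi=3.0, step=0.01):
    """Scan a grid of candidate means and return the most likely one."""
    n_steps = int(round((hi - lo) / step))
    grid = [lo + step * k for k in range(n_steps + 1)]
    return min(grid, key=lambda m: negative_log_likelihood(events, m))


rng = random.Random(0)  # fixed seed so the toy is reproducible
events = simulate_events(2000, TRUE_MEAN, rng)
best_mean = fit_mean(events)
print(f"fitted mean: {best_mean:.2f}")
```

In this toy the folding step is analytic. In a realistic setting the detector response would come from a detector simulation rather than a closed-form convolution, and the grid scan would be replaced by a proper optimizer or sampler.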

Central to our methodology is the use of advanced data science and artificial intelligence techniques. These tools enable the extraction of QCFs in a manner that is scalable and fully auto-differentiable. As a byproduct of our research, the QuantOm Collaboration is developing and releasing world-class physics software packages for computing QCFs and related observables, and for their inference from experimental event-level data.
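As a rough illustration of what "auto-differentiable" means, the sketch below implements forward-mode automatic differentiation with dual numbers in plain Python and uses the resulting exact gradient to fit one parameter. The `Dual` class, the `grad` helper, the toy least-squares loss, and its data points are all hypothetical. The sketch is not taken from the collaboration's software, which would more plausibly build on an established automatic-differentiation library.

```python
class Dual:
    """Number of the form value + deriv * eps, where eps * eps == 0."""

    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    @staticmethod
    def lift(x):
        """Wrap a plain number as a constant (zero derivative)."""
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __sub__(self, other):
        other = Dual.lift(other)
        return Dual(self.value - other.value, self.deriv - other.deriv)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        other = Dual.lift(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def grad(f, x):
    """Derivative of a one-parameter function f at x, via dual numbers."""
    return f(Dual(x, 1.0)).deriv


def loss(slope):
    """Toy least-squares loss for the model y = slope * x (made-up data)."""
    data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]
    total = 0.0
    for x, y in data:
        residual = slope * x - y
        total = total + residual * residual
    return total


# Plain gradient descent driven by the automatically computed derivative.
slope = 0.0
for _ in range(200):
    slope -= 0.01 * grad(loss, slope)

print(f"fitted slope: {slope:.4f}")
```

Because the derivative is propagated through every arithmetic operation, no hand-written gradient of `loss` is needed. The same property is what allows gradient-based optimization to run through a much longer chain of computations.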